Instant Multi-modal Intelligence for Real-time Agentic Applications

Works across audio, video, and text in a single multi-modal pipeline.

Try music.whissle.ai

Explore our music AI web app.

Accessible through and integrated with

Real-Time Transcripts

Traditional ASR systems transcribe quickly but miss deeper meaning.

Contextual Intelligence

Multi-modal LLMs offer richer insights but can't keep up in real time.

Whissle's meta-aware VoiceAI models bridge that gap.

They deliver transcripts, insights, and actionable information from audio or video, instantly and at scale.

Don't take our word for it: try the demo yourself!

Extracting multi-modal semantics while transcribing in real time.

Products and Services

Whissle Meta-1 Foundation Model

A multi-modal VoiceAI foundation model for real-time streams, available through the Whissle API, default integrations, and vendor platforms.

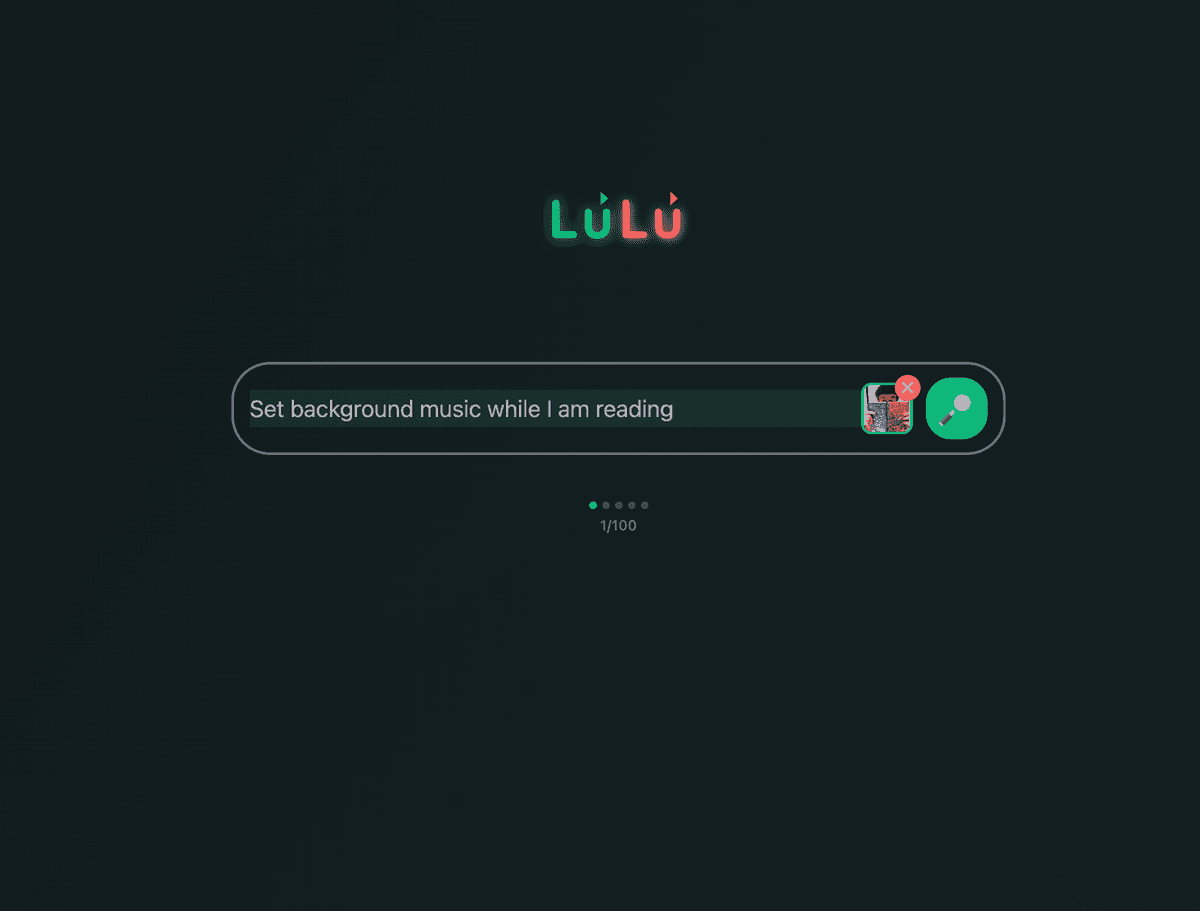

BOT - Lulu

Lulu is a multi-modal AI search agent that serves as your active and ambient companion.

Studio

Transform any multimedia content into actionable insights with AI-powered tools.