Best-in-Class Speech-to-Text API

Experience unparalleled accuracy and speed with our state-of-the-art Speech-to-Text solutions.

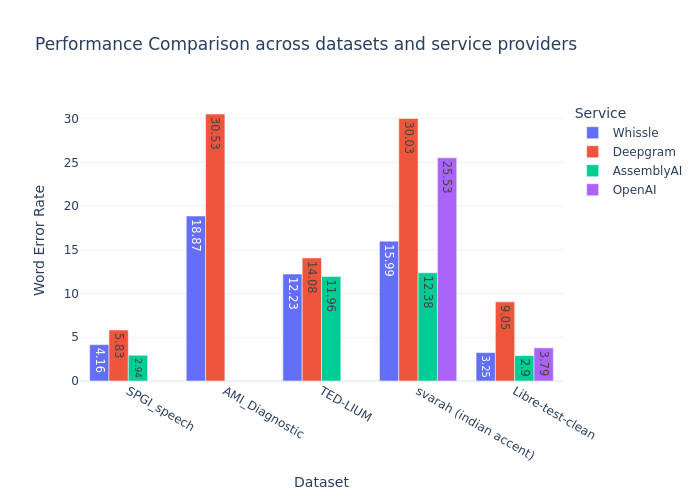

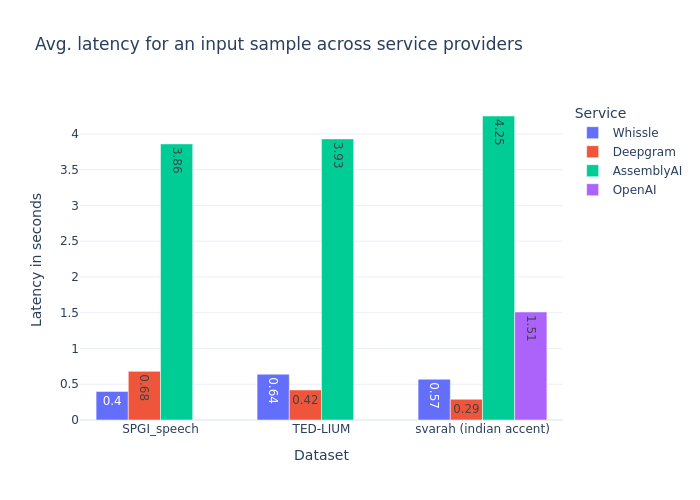

Our Speech-to-Text API surpasses competitors in both accuracy and processing speed. Select the model from the list below that best matches your language and task.

Available Models

- en-US-0.6b

- em-ea-1.1b

- en-US-NER

- en-US-300m

- hi-IN-NER

- ia-IN-NER

- ru-RU-300m

- en-US-IoT-NER
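
Most of the model names above appear to start with a locale prefix (for example `en-US`, `hi-IN`, `ru-RU`) followed by a size or task suffix. As a minimal sketch, assuming that naming pattern holds, the helper below narrows the list to one locale. The name `models_for_locale` is hypothetical and not part of the API; the model names are copied from the list above.

```python
# Model names copied verbatim from the list above.
AVAILABLE_MODELS = [
    "en-US-0.6b",
    "em-ea-1.1b",
    "en-US-NER",
    "en-US-300m",
    "hi-IN-NER",
    "ia-IN-NER",
    "ru-RU-300m",
    "en-US-IoT-NER",
]


def models_for_locale(locale, models=AVAILABLE_MODELS):
    """Return the models whose name starts with the given locale prefix.

    Hypothetical helper: assumes names look like '<locale>-<suffix>'.
    """
    prefix = locale + "-"
    return [name for name in models if name.startswith(prefix)]


if __name__ == "__main__":
    print(models_for_locale("en-US"))
```

In practice the list returned by the `list-asr-models` endpoint could be passed as the `models` argument instead of the hard-coded names, provided it yields plain name strings.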

Code Examples

import requests


# Function to list available ASR models
def list_asr_models(auth_token):
    url = 'https://api.whissle.ai/v1/list-asr-models'
    headers = {'Authorization': f'Bearer {auth_token}'}
    response = requests.get(url, headers=headers)
    # Parsed JSON on success, raw response text otherwise
    return response.json() if response.status_code == 200 else response.text


# Function to transcribe audio using a specific ASR model
def transcribe_audio(auth_token, model_name, audio_path):
    url = 'https://api.whissle.ai/v1/conversation/STT'
    # The model name and token are sent as query parameters;
    # passing them via `params` lets requests URL-encode the values.
    params = {'model_name': model_name, 'auth_token': auth_token}
    # Open the file in a context manager so it is closed after the upload
    with open(audio_path, 'rb') as audio_file:
        response = requests.post(url, params=params, files={'audio': audio_file})
    return response.json() if response.status_code == 200 else response.text


# Example usage
if __name__ == "__main__":
    AUTH_TOKEN = "your_auth_token_here"  # Replace with your token
    print("Available Models:", list_asr_models(AUTH_TOKEN))
    transcription = transcribe_audio(AUTH_TOKEN, "en-US-0.6b", "path/to/audio.wav")
    print("Transcription:", transcription)
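
Both functions above return parsed JSON on HTTP 200 and the raw response text otherwise, so a caller has to inspect the return type to detect a failure. One possible alternative, sketched here under that assumption, is to raise an exception on any non-200 status. The names `TranscriptionError` and `parse_api_response` are hypothetical and not part of the API, and nothing is assumed about the shape of the JSON body.

```python
class TranscriptionError(RuntimeError):
    """Raised when the API answers with a non-200 status (hypothetical name)."""


def parse_api_response(response):
    """Return the decoded JSON body on HTTP 200, otherwise raise.

    `response` is any object exposing `status_code`, `text`, and `json()`,
    such as a `requests.Response`.
    """
    if response.status_code != 200:
        raise TranscriptionError(
            f"request failed with status {response.status_code}: {response.text}"
        )
    return response.json()
```

With this helper, the final `return` line in each function could become `return parse_api_response(response)`, and callers would wrap the call in `try`/`except TranscriptionError`.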